Automatic Thai Finger Spelling Transcription

DOI:

https://doi.org/10.48048/wjst.2021.11233

Keywords:

Sign Language Transcription, Sign Sequence Classification, Signing Video Transcription, Thai Finger Spelling Transcription

Abstract

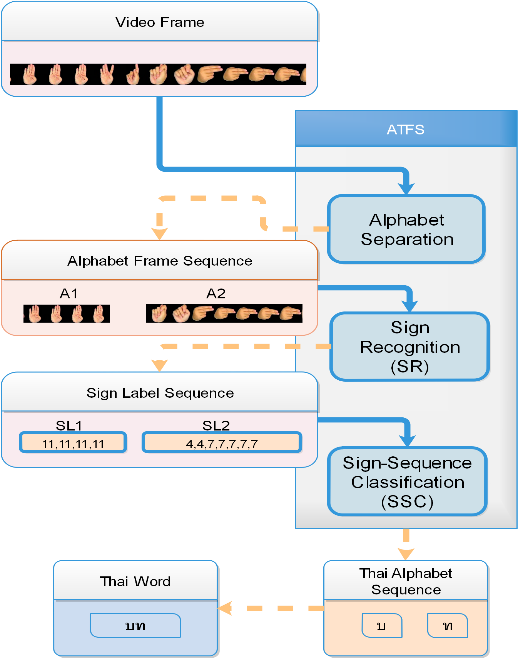

This article explores the transcription of a video recording of Thai Finger Spelling (TFS), a specific signing mode used in Thai Sign Language, into the corresponding Thai word. TFS covers 42 Thai alphabets and 20 vowels using multiple, complex schemes, which leads to many technical challenges uncommon in the spelling schemes of other sign languages. Our proposed system, Automatic Thai Finger Spelling Transcription (ATFS), processes a signing video in 3 stages: ALS marks video frames so that non-signing frames can be removed easily and frames associated with the same alphabet can be grouped conveniently; SR classifies a signing image frame into a sign label (or its equivalent); and SSC transcribes a series of signs into alphabets. ALS exploits the TFS practice of signing different alphabets at different locations. SR and SSC employ well-adopted spatial and sequential models. ATFS achieves an Alphabet Error Rate (AER) of 0.256 (cf. 0.63 for the baseline method). In addition to ATFS, our findings disclose a benefit of coupling the image classification and sequence modeling stages by using a feature or penultimate vector for label representation rather than a definitive label or one-hot coding. Our results also assert the necessity of a smoothing mechanism in ALS and reveal a benefit of our proposed WFS, which could lead to over 15.88 % improvement. For TFS transcription, our work emphasizes the use of signing location to identify different alphabets. This is contrary to the common belief in exploiting signing time duration, which our data show to be ineffective.
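The abstract reports an Alphabet Error Rate (AER) but does not define it on this page. The sketch below assumes AER is computed like a character error rate, that is, the Levenshtein edit distance between the transcribed and reference alphabet sequences divided by the reference length. The function names `edit_distance` and `alphabet_error_rate` are hypothetical and are not taken from the article.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (substitution, insertion, deletion cost 1)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        curr = [i]
        for j, h in enumerate(hyp, 1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion from ref
                            curr[j - 1] + 1,      # insertion into ref
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1]


def alphabet_error_rate(ref, hyp):
    """Assumed AER definition: edit distance normalized by reference length."""
    if not ref:
        raise ValueError("reference sequence must not be empty")
    return edit_distance(ref, hyp) / len(ref)


# Illustrative sequences only; these are not data from the article.
reference = ["ก", "า", "ร", "ม"]
transcribed = ["ก", "า", "น", "ม"]
print(alphabet_error_rate(reference, transcribed))
```

Under this assumed definition, an AER of 0.256 would correspond to roughly one edit for every four reference alphabets.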

HIGHLIGHTS

- Prototype of Thai finger spelling transcription (transcribing a signing video to alphabets)

- Utilization of signing location as cue for identification of different alphabets

- Disclosure of a benefit of coupling image classification and sequence modeling in signing transcription

- Examination of various frame smoothing techniques and their contributions to the overall transcription performance
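As a rough illustration of why frame smoothing matters before grouping, the sketch below applies a sliding-window majority vote to per-frame labels and then groups consecutive frames that share a label, dropping non-signing frames. The majority vote is a stand-in chosen for illustration; the smoothing techniques examined in the article, including WFS, are not described on this page. The names `smooth_labels` and `group_frames`, the `"none"` label, and the window size are all assumptions.

```python
from collections import Counter
from itertools import groupby


def smooth_labels(labels, window=5):
    """Sliding-window majority vote; ties keep the frame's own label when possible."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i, label in enumerate(labels):
        neighbourhood = labels[max(0, i - half): i + half + 1]
        counts = Counter(neighbourhood)
        best = max(counts.values())
        if counts[label] == best:
            smoothed.append(label)
        else:
            # Otherwise take the first label in the window with the top count.
            smoothed.append(next(l for l in neighbourhood if counts[l] == best))
    return smoothed


def group_frames(labels, non_signing="none"):
    """Group consecutive equal labels into (label, start, end) runs, skipping non-signing frames."""
    groups, start = [], 0
    for label, run in groupby(labels):
        length = len(list(run))
        if label != non_signing:
            groups.append((label, start, start + length))
        start += length
    return groups


# Hypothetical per-frame location labels with two single-frame glitches.
frames = ["none", "A", "A", "none", "A", "A", "B", "B", "A", "B", "B", "none"]
print(group_frames(frames))                            # glitches split the runs
print(group_frames(smooth_labels(frames, window=3)))   # smoothed runs
```

Without smoothing, the two glitched frames split the sequence into five groups; with a window of 3 the same frames collapse into one group per location label.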

GRAPHICAL ABSTRACT

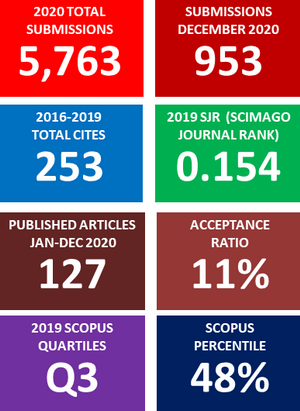

License

Copyright (c) 2021 Walailak University

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.